LERa is a robust and adaptable solution for error-aware task execution in robotics.

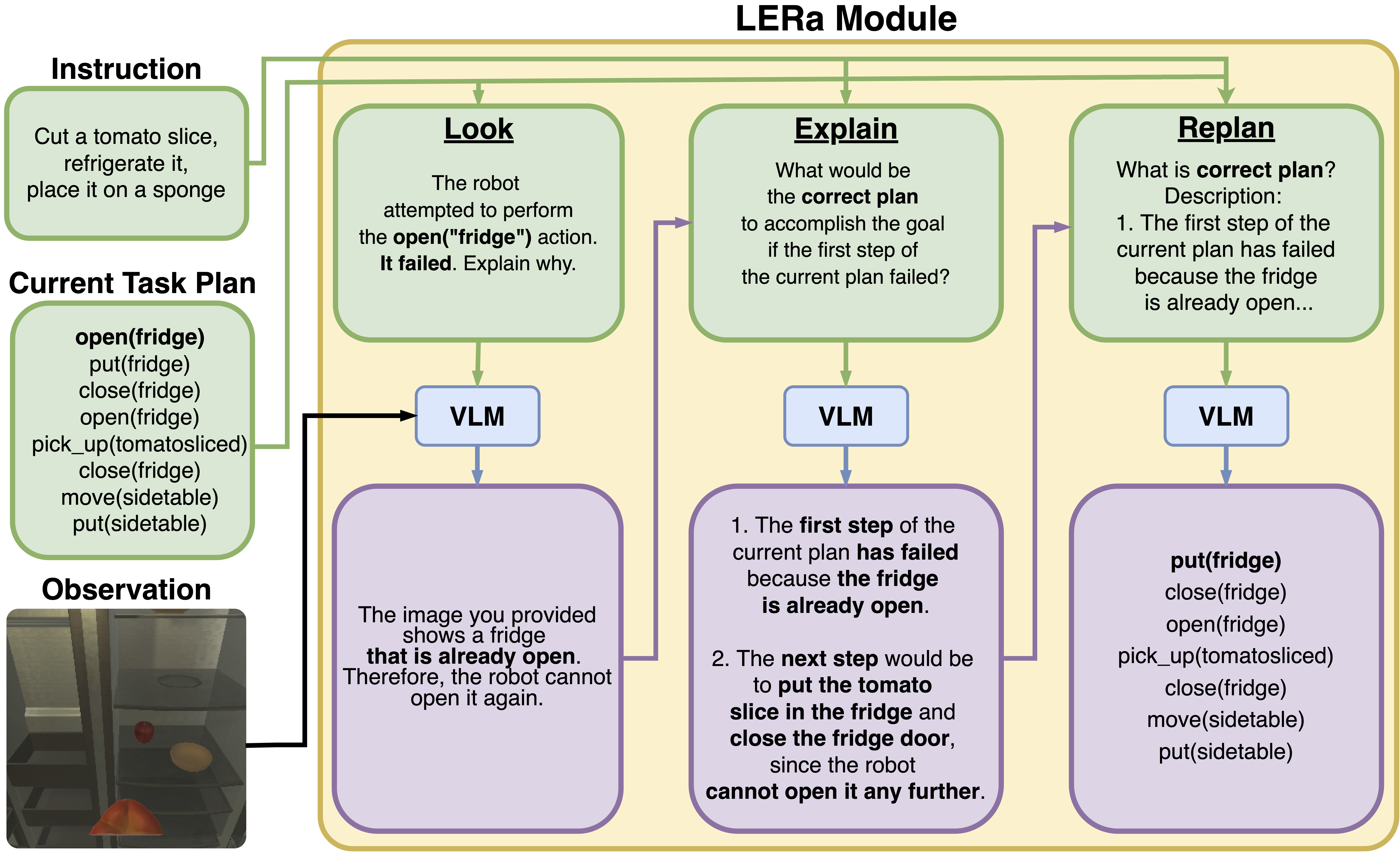

Large Language Models are increasingly used in robotics for task planning, but their reliance on textual inputs limits their adaptability to real-world changes and failures. To address these challenges, we propose LERa — Look, Explain, Replan — a Visual Language Model-based replanning approach that utilizes visual feedback. Unlike existing methods, LERa requires only a raw RGB image, a natural language instruction, an initial task plan, and failure detection—without additional information such as object detection or predefined conditions that may be unavailable in a given scenario. The replanning process consists of three steps: (i) Look, where LERa generates a scene description and identifies errors; (ii) Explain, where it provides corrective guidance; and (iii) Replan, where it modifies the plan accordingly. LERa is adaptable to various agent architectures and can handle errors from both dynamic scene changes and task execution failures. We evaluate LERa on the newly introduced ALFRED-ChaOS and VirtualHome-ChaOS datasets, achieving a 40% improvement over baselines in dynamic environments. In tabletop manipulation tasks with a predefined probability of task failure within the PyBullet simulator, LERa improves success rates by up to 67%. Further experiments, including real-world trials with a tabletop manipulator robot, confirm LERa's effectiveness in replanning. We demonstrate that LERa is a robust and adaptable solution for error-aware task execution in robotics.
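The three steps described above can be sketched as a short loop of VLM calls. This is only a minimal illustration under stated assumptions, not the authors' implementation: the `VLM` callable signature, the prompt wording, the `ReplanResult` container, the `lera_replan` function, and the `stub_vlm` stand-in are all hypothetical names introduced here. The only inputs used are the ones the abstract lists: a raw RGB image, the instruction, the current plan, and a detected failure.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical VLM interface (an assumption): (prompt text, raw RGB image bytes) -> reply text.
VLM = Callable[[str, bytes], str]


@dataclass
class ReplanResult:
    scene: str        # output of the Look step
    guidance: str     # output of the Explain step
    plan: List[str]   # output of the Replan step


def lera_replan(vlm: VLM, image: bytes, instruction: str,
                plan: List[str], failed_index: int) -> ReplanResult:
    """Run Look -> Explain -> Replan once, after a failure has been detected."""
    remaining = plan[failed_index:]
    context = (f"Instruction: {instruction}\n"
               f"Failed action: {plan[failed_index]}\n"
               f"Remaining plan: {'; '.join(remaining)}\n")

    # Look: describe the scene and identify what went wrong.
    scene = vlm(context + "Describe the scene and identify the error.", image)

    # Explain: turn the observation into corrective guidance.
    guidance = vlm(context + f"Scene: {scene}\nExplain how to correct the error.", image)

    # Replan: rewrite the remaining plan, one action per line.
    reply = vlm(context + f"Scene: {scene}\nGuidance: {guidance}\n"
                "Write the corrected remaining plan, one action per line.", image)
    new_plan = [line.strip() for line in reply.splitlines() if line.strip()]
    return ReplanResult(scene, guidance, new_plan)


def stub_vlm(prompt: str, image: bytes) -> str:
    """Canned replies standing in for a real VLM, so the sketch runs offline."""
    if "one action per line" in prompt:
        return "pick up red block\nplace red block on blue block"
    if "Explain" in prompt:
        return "The red block was dropped; pick it up again before placing it."
    return "The red block lies on the table; the gripper is empty."
```

Because the function depends only on a callable, any VLM client could in principle be wrapped to match the assumed signature, and the agent that executes the plan stays unchanged. Calling `lera_replan(stub_vlm, b"", "Stack the red block on the blue block", ["pick up red block", "place red block on blue block"], 1)` returns a plan that re-inserts the pick-up action before the failed placement.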

| Agent | ALFRED-ChaOS (Seen) | | | ALFRED-ChaOS (Unseen) | | | VirtualHome-ChaOS | | | PyBullet (gpt-4o) | | | PyBullet (Gemini) | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | SR↑ | GCR↑ | SRep↑ | SR↑ | GCR↑ | SRep↑ | SR↑ | GCR↑ | SRep↑ | SR↑ | GCR↑ | SRep↑ | SR↑ | GCR↑ | SRep↑ |
| Oracle | 33.04 | 50.04 | - | 31.65 | 51.71 | - | 50.00 | 85.75 | - | 19.00 | 32.50 | - | 19.00 | 32.50 | - |
| O-Ra | 34.38 | 51.19 | 7.69 | 34.17 | 54.08 | 14.16 | 50.00 | 84.33 | 0.00 | 53.00 | 61.58 | 39.13 | 56.00 | 73.67 | 46.39 |
| O-ERa | 40.18 | 56.40 | 37.08 | 42.81 | 61.81 | 33.33 | 50.00 | 85.92 | 0.00 | 75.00 | 80.50 | 71.24 | 72.00 | 78.25 | 65.65 |
| O-LRa | 34.38 | 51.00 | 6.73 | 33.45 | 53.57 | 11.01 | 93.00 | 97.04 | 87.00 | 79.00 | 83.33 | 73.71 | 87.00 | 92.92 | 85.81 |
| Baseline | 33.04 | 50.15 | 3.15 | 32.01 | 51.89 | 0.98 | 52.00 | 85.91 | 4.10 | 67.00 | 74.92 | 59.06 | 82.00 | 89.08 | 78.90 |
| O-LERa | 49.55 | 64.55 | 73.39 | 53.60 | 70.23 | 74.57 | 94.06 | 98.17 | 95.03 | 67.00 | 72.67 | 61.29 | 86.00 | 89.17 | 84.83 |

| VLM | ALFRED-ChaOS (Seen) | | | ALFRED-ChaOS (Unseen) | | | VirtualHome-ChaOS | | | PyBullet | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | SR↑ | GCR↑ | SRep↑ | SR↑ | GCR↑ | SRep↑ | SR↑ | GCR↑ | SRep↑ | SR↑ | GCR↑ | SRep↑ |
| LLaMA-3.2-11b | 35.71 | 52.01 | 11.11 | 33.81 | 53.81 | 9.38 | 52.00 | 74.17 | 20.00 | 34.00 | 45.92 | 20.54 |
| LLaMA-3.2-90b | 38.84 | 54.80 | 30.58 | 36.33 | 55.97 | 25.93 | 54.00 | 84.25 | 8.00 | 64.00 | 71.08 | 57.68 |
| Gemini-Flash-1.5 | 46.43 | 61.61 | 67.22 | 51.44 | 68.38 | 67.71 | 59.40 | 72.51 | 24.00 | 55.00 | 66.33 | 46.75 |
| Gemini-Pro-1.5 | 42.19 | 56.51 | 56.16 | 46.40 | 63.85 | 55.64 | 65.35 | 87.87 | 41.58 | 86.00 | 89.17 | 84.83 |
| gpt-4o-mini | 43.75 | 59.71 | 46.81 | 46.04 | 63.97 | 49.12 | 74.25 | 82.50 | 56.25 | 48.00 | 62.92 | 37.75 |
| gpt-4o | 49.55 | 64.55 | 73.39 | 53.60 | 70.23 | 74.57 | 94.06 | 98.17 | 95.03 | 67.00 | 72.67 | 61.29 |

| Agent | Seen split | | | Unseen split | | |
|---|---|---|---|---|---|---|
| | SR↑ | GCR↑ | SRep↑ | SR↑ | GCR↑ | SRep↑ |
| O-15 | 12.50 | 35.90 | - | 12.95 | 39.75 | - |
| O-10 | 17.41 | 40.89 | - | 17.63 | 43.38 | - |
| O-05 | 24.55 | 45.01 | - | 24.82 | 47.54 | - |
| O-FC | 33.04 | 50.04 | - | 31.65 | 51.71 | - |
| O-L-15 | 22.32 | 44.42 | 35.48 | 20.50 | 45.29 | 25.00 |
| O-L-10 | 25.45 | 46.91 | 47.32 | 25.18 | 48.95 | 28.70 |
| O-L-05 | 34.38 | 52.57 | 54.16 | 35.97 | 55.76 | 35.43 |
| O-L-FC | 44.64 | 59.00 | 52.83 | 46.76 | 63.46 | 49.34 |

@inproceedings{patratskiy2025lera,
  title={LERa: Replanning with Visual Feedback in Instruction Following},
  author={Pchelintsev, Svyatoslav and Patratskiy, Maxim and Onishchenko, Anatoly and Korchemnyi, Alexandr and Medvedev, Aleksandr and Vinogradova, Uliana and Galuzinsky, Ilya and Postnikov, Aleksey and Kovalev, Alexey K. and Panov, Aleksandr I.},
  booktitle={IROS 2025},
  year={2025}
}